The performance of web applications is becoming critical as usage grows exponentially and competition in the market intensifies. Today, both IT and business stakeholders are increasingly concerned about application performance. Simulating real user behavior during a performance test is therefore vital to achieving meaningful test results.

40% of enterprise projects report application performance bottlenecks and overutilization of hardware, which leads to budget overruns. These bottlenecks stem from the lack of a proper approach to collecting performance requirements. Most enterprises currently face the following pain points, knowingly or unknowingly:

- The performance test team conducts a test that is not in line with the production peak user load. As a result, the product might experience severe bottlenecks when more users access the application than the anticipated capacity.

- Overallocation of hardware: when test results do not meet the SLAs, teams allocate more hardware. If the test used an incorrect (higher) user load, that extra hardware is money spent unnecessarily.

To mitigate these issues, performance testers should start by creating a workload model.

Performance Testing always starts with the planning phase. Workload modelling is a critical step in the planning phase to derive the actual peak user load and the business scenarios/real user activity.

In general, the performance test team starts by sending application users/owners a set of questions. Often, they do not have the right information on real production user activity. Testers end up spending a lot of time meeting with architects, business, and platform teams to understand their requirements. Understanding their needs is a time-consuming process, which can be avoided with a better requirements process.

In the end, the tester derives the requirements from these discussions rather than from an approach or tool that is integrated with production. Hence, it is recommended to start with a test approach that derives the workload model from the web server logs.

Every performance tester should follow the pointers below to design the workload model accurately:

- Mimic the real user/transaction load in production with exact caching and think time behavior

- Derive the geographical distribution across the globe and usage of browsers

- Understand the business steps and end-user business flow

- Get a basic understanding from the business of the critical transactions that real users perform

How Log Analyzer Works

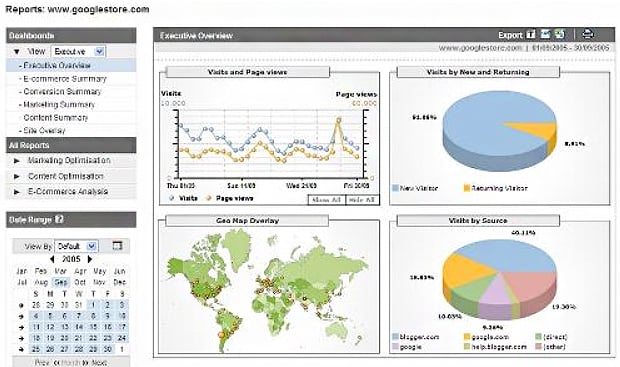

Server log analyzers and web analytics tools can be used to model the workload accurately. A log analyzer tool parses web server log files and derives user behavior based on location, browser, actions, and the time the visitor spends on each webpage. The tool also integrates with browser components such as JavaScript (JS) and cookies to mimic end-user behavior.
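To make the parsing step concrete, here is a minimal sketch of how a single web server log line can be broken into the fields a log analyzer works with. It assumes the logs use the common Apache/NGINX "combined" format; the `parse_line` helper and the sample line are hypothetical, and real analyzers handle many more formats and edge cases.

```python
import re
from datetime import datetime

# Regex for the "combined" access log format (an assumption about the log layout).
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    record = match.groupdict()
    # Convert the timestamp and status code into typed values.
    record["time"] = datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z")
    record["status"] = int(record["status"])
    return record

# Hypothetical sample line, for illustration only.
sample = (
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /invoices HTTP/1.1" 200 2326 '
    '"https://example.com/home" "Mozilla/5.0 (X11; Linux x86_64)"'
)
record = parse_line(sample)
print(record["ip"], record["path"], record["time"].hour, record["agent"])
```

The client IP can then be mapped to a location and the user agent string to a browser, which is the raw material for the location and browser statistics mentioned above.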

Sample Dashboard

(Image Courtesy: Google Store)

Workload Modelling Approach

Below is a six-step approach to derive the statistics, irrespective of the tool used.

- Get the last six months to one year of web server logs from production, based on the nature of the application under test (AUT)

- Feed this data to a log analyzer tool to extract the required stats

- Identify peak day/week and drill down into daily statistics

- Identify peak hour

- Derive business flows

- Derive Workload

To retrieve stats from the logs, one can use any of the available log analyzer tools, many of them open source or free, such as WebLog Expert, Web Log Analyzer, Elastic Stack, Nagios, Graylog, etc. Feed the logs to one of these tools and extract the required stats: peak day/week, peak hour, business flows, and workload.

Peak usage is measured in terms of active users. The peak hour is the hour of business in which the most sales occurred or the most visitors arrived.

The tester needs to focus on page views, unique page views, average time spent on each page, bounce rate, and exit rate. Also, review how the content is performing by page URLs, titles, search terms, or events. Based on these, derive the business scenarios.

Now that we have the business scenarios, the next step is to calculate the number of users based on the SLAs of each transaction, including think time and pacing, to achieve the desired peak user load.
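As an illustration of the peak-hour step, the sketch below buckets hits by clock hour and picks the hour with the most distinct visitors. The `peak_hour` function, the visitor IDs, and the timestamps are all hypothetical; in practice the input would come from the parsed server logs, and "active user" may be defined differently by each tool.

```python
from collections import defaultdict
from datetime import datetime

def peak_hour(hits):
    """hits: iterable of (visitor_id, datetime).

    Returns (start_of_hour, distinct_visitor_count) for the busiest hour.
    """
    visitors_by_hour = defaultdict(set)
    for visitor, timestamp in hits:
        # Truncate the timestamp to the start of its hour.
        bucket = timestamp.replace(minute=0, second=0, microsecond=0)
        visitors_by_hour[bucket].add(visitor)
    bucket, visitors = max(visitors_by_hour.items(), key=lambda item: len(item[1]))
    return bucket, len(visitors)

# Made-up sample data, for illustration only.
hits = [
    ("u1", datetime(2023, 10, 10, 9, 5)),
    ("u2", datetime(2023, 10, 10, 9, 40)),
    ("u1", datetime(2023, 10, 10, 10, 15)),
    ("u2", datetime(2023, 10, 10, 10, 20)),
    ("u3", datetime(2023, 10, 10, 10, 45)),
    ("u3", datetime(2023, 10, 10, 11, 2)),
]
hour, count = peak_hour(hits)
print(hour, count)
```

Counting distinct visitors rather than raw hits keeps one very active user from inflating the peak; if the business defines the peak by sales instead, the same bucketing works with order events as input.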
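The first two metrics can be sketched in a few lines. This example assumes that a unique page view is counted once per session per page, which is how web analytics tools commonly define it; the `page_stats` helper, session IDs, and URLs are hypothetical.

```python
from collections import Counter

def page_stats(views):
    """views: list of (session_id, url) tuples, one per page load.

    Returns (page_views, unique_page_views) as Counters keyed by URL.
    """
    page_views = Counter(url for _, url in views)
    # De-duplicate (session, url) pairs so repeat loads in a session count once.
    unique_page_views = Counter(url for _, url in set(views))
    return page_views, unique_page_views

# Made-up sample data, for illustration only.
views = [
    ("s1", "/subscriptions"),
    ("s1", "/subscriptions"),
    ("s1", "/invoices"),
    ("s2", "/subscriptions"),
    ("s2", "/reports"),
]
page_views, unique_page_views = page_stats(views)
print(page_views["/subscriptions"], unique_page_views["/subscriptions"])
```

Pages with high view counts in the peak hour are the natural candidates for the business scenarios in the workload model.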

Below is a sample user load calculation:

| Scenario Name | Single Iteration Duration (Minutes) | Hourly Iterations per User (60 / Single Iteration Duration) | Total Transactions per Hour (from the respective business flow) | Total Users (Total Transactions / Hourly Iterations per User) | Vusers Including Variance (Total Users + 20%) |
|---|---|---|---|---|---|
| List of Subscriptions | 2.00 | 30.00 | 39 | 1 | 1 |
| Add/Edit Subscriptions | 3.00 | 20.00 | 66 | 3 | 4 |
| Add/Edit Invoice | 3.00 | 20.00 | 78 | 4 | 5 |
| View Invoices | 2.00 | 30.00 | 67 | 2 | 2 |
| Subscription Reports | 3.00 | 20.00 | 10 | 1 | 1 |
| Main Reports | 4.00 | 15.00 | 40 | 3 | 4 |
| Billing Contacts | 4.00 | 15.00 | 30 | 2 | 2 |
| Total | | | 330 | | 19 |
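The arithmetic behind the sample calculation can be sketched as follows. The formulas come from the column headers; the rounding rule (round to the nearest whole user, with halves rounded up, applied before and after adding the 20% variance) is an assumption inferred from the table values, and the function names are hypothetical.

```python
import math

VARIANCE = 0.20  # 20% headroom, as in the last column of the table

def round_half_up(value):
    """Round to the nearest integer, halves up (assumed rounding rule)."""
    return math.floor(value + 0.5)

def user_load(iteration_minutes, transactions_per_hour):
    """Return (hourly iterations per user, total users, vusers incl. variance)."""
    hourly_iterations = 60 / iteration_minutes
    users = round_half_up(transactions_per_hour / hourly_iterations)
    vusers = round_half_up(users * (1 + VARIANCE))
    return hourly_iterations, users, vusers

# (scenario name, single iteration duration in minutes, transactions per hour)
scenarios = [
    ("List of Subscriptions", 2, 39),
    ("Add/Edit Subscriptions", 3, 66),
    ("Add/Edit Invoice", 3, 78),
    ("View Invoices", 2, 67),
    ("Subscription Reports", 3, 10),
    ("Main Reports", 4, 40),
    ("Billing Contacts", 4, 30),
]

rows = {name: user_load(minutes, tx) for name, minutes, tx in scenarios}
total_transactions = sum(tx for _, _, tx in scenarios)
total_vusers = sum(vusers for _, _, vusers in rows.values())
print(total_transactions, total_vusers)  # prints: 330 19
```

For example, Add/Edit Invoice takes 3 minutes per iteration, so one user completes 20 iterations per hour; 78 transactions per hour therefore need 78 / 20 = 3.9, rounded to 4 users, and 5 virtual users once the 20% variance is added.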

Based on the above peak user load (x), calculate and derive the mandatory tests: Performance Test (0.5x), Load Test (1x), Stress Test (1.5x, 2x, 2.5x, etc.), and Longevity Test (0.75x).

| Test Type | # Users | Planned Sessions to Simulate |
|---|---|---|
| Performance Test | 9.5 | 165 |
| Load Test | 19 | 330 |
| Stress Test | 38 | 660 |
| Longevity Test | 14 | 1485 |
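The scaling from peak load to each test type can be sketched as below, using the multipliers given in the text, with 2x chosen for the stress test to match the table. The `plan` function is hypothetical and returns per-hour figures only. The Longevity Test row is therefore not reproduced exactly: the table rounds users to 14 and lists 1485 sessions, which presumably covers a multi-hour run whose duration is not stated.

```python
PEAK_USERS = 19               # total vusers from the user load calculation
PEAK_SESSIONS_PER_HOUR = 330  # total transactions per hour at peak

# Multipliers from the text; 2.0 for stress is one of several suggested levels.
MULTIPLIERS = {
    "Performance Test": 0.5,
    "Load Test": 1.0,
    "Stress Test": 2.0,
    "Longevity Test": 0.75,
}

def plan(multiplier):
    """Scale peak users and hourly sessions by the given multiplier."""
    return PEAK_USERS * multiplier, PEAK_SESSIONS_PER_HOUR * multiplier

test_plan = {name: plan(m) for name, m in MULTIPLIERS.items()}
for name, (users, sessions) in test_plan.items():
    print(f"{name}: {users} users, {sessions} sessions/hour")
```

Fractional results such as 9.5 users need to be rounded to whole virtual users when the test is configured in the load testing tool.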

A realistic workload model is the core of a reliable performance test. Hence, it is imperative to analyze the traffic and the application to generate a workload model on which to base the performance testing methodology.

Innominds performance TCoE has performed multiple engagements for many enterprise applications, helping customers with capacity planning, identifying bottlenecks, baselining products, and scaling applications. Innominds is a pioneer in handling intricate web and mobile apps, both on-premise and in the cloud.

Author Bio: Sridhar Vangapandu is an Associate Manager - Quality Engineering at Innominds and leads the Performance COE practice. He comes with 14 years of expertise in Performance, Functional, Process & Management, with experience in implementing global projects in Fortune 500 companies. He holds Six Sigma Green Belt and Lean Bronze certifications and is a Certified Scrum Master. You can reach him at svangapandu@innominds.com for any advisory on the topic of Enterprise Performance Testing and Quality Engineering.