Artificial Intelligence (AI) and Machine Learning (ML) are the next steps in digital evolution, and they are redefining technology across the testing landscape. In application development, newer requirements and trends such as Rapid Application Development (RAD) and Continuous Integration (CI) are pushing Quality Assurance (QA) teams to include automation at every step of the testing process. When testing engineers befriend the bots and use their capabilities to assist internal processes, freeing up time for creativity and business development, their products can stand out in their respective markets.

Product development success depends inherently on testing success. Testing measures the product’s resiliency, and intelligent test automation helps implement DevOps principles that encourage development and operations to work in coordination with each other. During the DevOps lifecycle, tests are run more frequently, and in between development cycles, to complement the operations processes. A constant handover of information between the development and operations teams is integral to bringing out the best from the engineering team. Inculcating the DevOps culture in testing is proving beneficial to long-term product sustenance.

According to Gartner, by 2024, three-quarters of large enterprises will be using AI-enabled test automation tools that support continuous testing across the different stages of the DevOps life cycle.

Testing is not only meant to build the resilience and durability of the product and its features; it can also lead to new discoveries that make app development more innovative. It is meant to transform the entire product life cycle.

Such expectations make it important to give Quality Engineering (QE) teams the path of least resistance in adopting the latest and ‘greatest’ technologies such as AI and ML.

Driving innovation along with stable application deployments

Involving AI and ML in QE and testing implies predefining the steps in routine testing procedures, integrating automated processes into every possible corner of the entire development cycle and creating models that can make new findings. That is not to say that all implementations of ML in testing can achieve these outcomes in an instant or from the start.

A smooth-running testing operation is achieved by making smaller, subtler changes at the beginning and then progressing towards the larger, more mainstream expectations. As testing matures, test automation can take over larger parts of the pipeline, freeing the team to take up bigger and more challenging tasks.

Utilizing the potential for innovation through R&D can turn testers into decision makers. Based on the regular testing parameters, ML models are trained to run routine tests before the testing engineer takes over to perform more streamlined testing for further product fine-tuning. Testers are closely involved in training the model to recognize common errors and rectify them. Freed from the debugging rut, the team can create better test scripts and suggest improvements to the app. This reinforces the role of testers as people capable of taking decisions that make the product more successful in the market.

Overall, the capabilities of an optimized testing pipeline with integrated automation could be summarized under maintenance, scalability and accuracy.

1. Maintenance:

As with any product, changes are implemented from time to time to keep the app in tune with changing consumer demands. However, testing may not be integrated neatly into all the phases of development, or the tests might not be optimized to keep producing the right results as the code changes.

The problem of maintenance has caused businesses to drop projects and avoid mature initiatives because their teams spent the lion’s share of their time on debugging. Automation that responds well to regular changes in different application components can in turn improve the pace of delivery. This is not always an easy task for a newcomer, since a single change could impact layers of work, and identifying those impacts and training the testing system could take a long while. However, starting with some basic tests and progressing towards more high-value tests leads to optimized testing in the long run.

Trying to achieve maintenance automation for complex test scenarios could also backfire. The effect of such an achievement might be monumental but the risks are potentially destructive to the whole product.

The key here is to start by testing the easy, independent and most incidental parts of the program, and then take up additional testing objectives as you progress.

2. Scalability:

When testing takes more time than actual development, there is no room to innovate or expand your business through advanced feature releases or additional production. The ability to scale comes from a system of development that works well under pressure and can implement changes rapidly. However, the automation needs to be specific and intentional.

Identifying the aspects that matter most for each project’s delivery, and choosing to serve only those aspects in line with customer expectations, will raise the application’s market value sooner.

One quick way to do this is to test only across the configurations that are most common among your particular customer base. Spending time on ‘extra’ testing can delay your deployments unnecessarily.
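As a minimal sketch of this idea, the snippet below picks the most common configurations until a chosen share of the customer base is covered. The function name `select_configurations`, the usage figures and the 90% target are illustrative assumptions, not part of any specific tool.

```python
from typing import Dict, List


def select_configurations(usage_counts: Dict[str, int],
                          target_coverage: float = 0.9) -> List[str]:
    """Return the most common configurations that together cover
    at least `target_coverage` of all recorded users."""
    if not 0 < target_coverage <= 1:
        raise ValueError("target_coverage must be in the range (0, 1]")
    total = sum(usage_counts.values())
    if total <= 0:
        raise ValueError("usage_counts must contain at least one user")

    selected: List[str] = []
    covered = 0
    # Walk through configurations from most to least common.
    ranked = sorted(usage_counts.items(), key=lambda kv: kv[1], reverse=True)
    for config, count in ranked:
        if covered >= target_coverage * total:
            break  # enough of the customer base is already covered
        selected.append(config)
        covered += count
    return selected


# Hypothetical usage data: number of users per browser/OS combination.
usage = {
    "Chrome/Windows": 50,
    "Safari/iOS": 30,
    "Chrome/Android": 15,
    "Firefox/Linux": 5,
}
print(select_configurations(usage, target_coverage=0.9))
```

With this made-up data, the least common configuration is left out of the test matrix, which shortens each test run while still covering most users.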

Another way to achieve scalability, and one of the most overlooked testing strategies, is keeping the tests small (i.e., granular) and independent of each other. It may be easier to share test data and configurations across multiple tests, but doing so can cause larger disruptions later as the product grows. When tests are broken down, a failing or changed automated test does not disrupt large portions of the product’s functionality. Granularity helps testers act faster in many other ways, but its primary benefits are lower risk and more precise testing.
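The difference can be sketched in a few lines. In the example below, each test builds its own data through a small factory function instead of sharing one object, so the tests can run in any order. The shopping-cart functions are hypothetical stand-ins for real application code.

```python
def make_cart() -> dict:
    """Factory: every test gets a fresh cart, so no state is shared."""
    return {"items": [], "total": 0.0}


def add_item(cart: dict, name: str, price: float) -> dict:
    """Hypothetical application function under test."""
    cart["items"].append(name)
    cart["total"] += price
    return cart


def test_new_cart_is_empty():
    cart = make_cart()
    assert cart["items"] == []
    assert cart["total"] == 0.0


def test_add_single_item():
    cart = make_cart()  # does not depend on what other tests did
    add_item(cart, "pen", 2.5)
    assert cart["items"] == ["pen"]
    assert cart["total"] == 2.5


# The tests pass in either order because neither relies on shared data.
test_add_single_item()
test_new_cart_is_empty()
```

Had both tests used a single module-level cart, the outcome of `test_new_cart_is_empty` would depend on whether `test_add_single_item` ran first.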

3. Accuracy:

Test accuracy is the measure of how well a test produces the expected results while minimizing incorrect outcomes. Tests are generally carried out with different sets of data, configurations or combinations to get the best result. This could mean a larger set of data, or better-labelled data, to overcome the problem of ‘dirty’ data. Especially in the case of test automation, the nature of the data matters the most, since there is no human interpretation or correction while the test is running. But test automation has come a long way from its initial glitchy phases.

Increased test accuracy can be attributed largely to the capabilities of AI and machine learning. Volumes of calculation and computation that were unachievable by hand are now a breeze for AI systems. Therefore, testing systems can be optimized to test across larger sets of data, for a longer time, and at a faster rate. It is no wonder, then, that automated tests have improved radically in their accuracy.

In short, implementing advanced automation through machine learning can boost your development and production, in addition to leaving room for the operations team to breathe. The team is also free to experiment, develop newer testing concepts and bring them to the table.

Lowering business downtime with automated self-healing systems

Reducing downtime in any service delivery attracts more customers and also improves business outcomes in terms of revenue and recognition. The time taken to resolve service failures and correct bugs impacts business success and productivity. This issue has led to the introduction of a self-healing concept for testing applications without affecting business hours.

Self-healing systems are advantageous to any product because they buy time for the engineers to make appropriate changes and bring the system back to normal. A disruption may be the direct result of an intentional change in a component or function, or of an unintentional interruption in one of the software’s supporting services. Such disruptions happen often and are a normal part of ongoing application development.

Traditional methods of fixing these glitches are slow and based on static, rigid methodologies. They allow very little flexibility and cannot work without close monitoring. A self-healing system, by contrast, can check for events that disrupt the application’s functions and, by ‘mimicking’ a previous functioning state, temporarily resolve an otherwise destructive situation.

What self-healing essentially does is dynamically adapt tests to new changes by matching the changed element with a previously well-functioning object in the application. Self-healing systems can also constantly monitor log information, network stability, database updates, and overall application performance. Such self-managing systems have helped businesses grow and mend customer-service relationships, as they prevent delayed servicing and prolonged deliveries.
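The matching step can be sketched roughly as follows. This is a simplified illustration, not how any particular product implements self-healing: the element dictionaries, the `similarity` score and the 0.6 threshold are all assumptions made for the example. When the stored `id` of an element no longer exists on the page, the test falls back to the candidate that best matches the attributes recorded from the last working run.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]


def similarity(known: Element, candidate: Element) -> float:
    """Fraction of the known element's attributes the candidate still has."""
    if not known:
        return 0.0
    matches = sum(1 for key, value in known.items()
                  if candidate.get(key) == value)
    return matches / len(known)


def heal_locator(known: Element, page: List[Element],
                 threshold: float = 0.6) -> Optional[Element]:
    """Find the element on the current page that corresponds to `known`."""
    known_id = known.get("id")
    # 1. Normal path: the original id is still present.
    if known_id is not None:
        for element in page:
            if element.get("id") == known_id:
                return element
    # 2. Healing path: pick the closest match to the last working state.
    best = max(page, key=lambda el: similarity(known, el), default=None)
    if best is not None and similarity(known, best) >= threshold:
        return best
    return None  # nothing similar enough; a human should investigate


# Attributes recorded during the last successful run (hypothetical).
known_button = {"id": "submit-btn", "tag": "button",
                "text": "Submit", "class": "primary"}
# Current page: a developer renamed the id to "send-btn".
current_page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
    {"id": "send-btn", "tag": "button", "text": "Submit", "class": "primary"},
]
print(heal_locator(known_button, current_page))
```

The threshold keeps the test from silently attaching to an unrelated element; when no candidate is close enough, the function returns `None` so the failure is reported rather than hidden.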

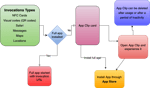

Operations engineers can use AI’s natural language processing (NLP) capabilities to interpret complex changes, or to tell the AI system what to do when there is a change. The automated system can take care of unexpected incidents outside of business hours and produce reports for analysing issues after they have happened. This in turn helps the QE team refine and improve the self-healing mechanism even further in the future.

Market analysis and faster customer feedback loops

By implementing test automation effectively, businesses can draw quality inferences that support further application improvement. Test report generation, monitoring and analysis can be converted into insights on how the test bots can be leveraged to get better results. These insights can also be compared against the overall market strategy for the product, which largely depends on customer responses.

Integrating customer feedback into the product, from the prototypes in the initial phases through the beta testing phase and finally into newer upgrades, can make or break a product. Product and feature launches are based on these feedback cycles and on injecting changes to features as regularly as possible.

Test automation and bots are increasingly being equipped to find potential failures beforehand. By making progress based on feedback, the product adapts to market changes and also becomes more competitive. Since the general consumer lacks the patience to wait for an upgrade, slow progress could drive some of the engagement away to other vendors. A great alternative is deploying test bots to take over the mundane activities so that the team can hop onto the innovation bandwagon and stay ahead of the competition.

Digital transformation in companies comes from a continuous effort towards meeting customer needs and reducing costs. The changes you make to your business model can ripple across the entire business for the better.

iHarmony™ is Innominds’ AI-led QA platform and accelerator that can cater to all your organization’s testing needs. Our clients report up to 80% reduction in testing costs and 15% faster release cycles through its implementation.

Looking to leverage AI-driven test automations for your business? Talk to us: https://www.innominds.com/quality-engineering