When it comes to performance testing, discussions with project teams can often get heated (in a good way!). Three questions come up again and again:

- Can performance testing be conducted early in the lifecycle?

- Can it be seamlessly integrated with other tests as part of the build process, providing instant feedback?

- Can the entire performance test suite be maintained as code and easily ported across different environments?

Organizations and project teams are increasingly recognizing the importance of performance testing and expect it to be an integral part of the DevOps lifecycle. They realize that testing early in the lifecycle lets them proactively identify and address performance issues before those issues become more costly and harder to resolve. That is a win for everyone!

Seamless integration of performance testing with other tests as part of the build process is crucial. This integration allows for immediate feedback on the performance impact of code changes, enabling teams to identify and rectify performance regressions quickly.
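One way to turn "immediate feedback" into something a pipeline can act on is a regression gate: compare this run's metrics with a stored baseline and fail the job when a transaction slows down too much. The sketch below is a minimal illustration under stated assumptions, not the solution described in this article; the choice of p95 response time, the 10% tolerance, and the function names are all hypothetical.

```python
def find_regressions(baseline_ms, current_ms, tolerance=0.10):
    """Return transaction labels whose p95 grew by more than `tolerance`.

    Both arguments map a transaction label to its p95 response time in ms.
    A label missing from the current run is also reported, because a
    silently dropped transaction usually means a broken script.
    """
    regressions = []
    for label, base in sorted(baseline_ms.items()):
        cur = current_ms.get(label)
        if cur is None or cur > base * (1 + tolerance):
            regressions.append(label)
    return regressions


def gate_exit_code(baseline_ms, current_ms, tolerance=0.10):
    """Print each regression and return a process exit code (0 = pass)."""
    failed = find_regressions(baseline_ms, current_ms, tolerance)
    for label in failed:
        print(f"Performance regression: {label}")
    return 1 if failed else 0
```

A CI script would end with `sys.exit(gate_exit_code(baseline, current))`; GitLab CI marks a job as failed when its script exits with a non-zero status, so a regression stops the pipeline just like a failing unit test.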

Another valuable aspect is the ability to maintain the performance test suite as code. Treating performance tests as code makes it easier to version control, collaborate, and automate the execution of tests. This approach also facilitates portability, enabling the performance test suite to be easily adapted and executed across different environments.
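Portability usually comes from keeping environment details out of the test plan itself. As a hedged sketch, assume the JMeter plan reads its target with `${__P(target_host)}` and `${__P(target_port)}`; the environment names and hosts below are hypothetical placeholders. The same versioned plan can then run anywhere by changing only the properties passed on the command line.

```python
# Hypothetical per-environment settings, versioned next to the test plan.
ENVIRONMENTS = {
    "qa": {"target_host": "qa.example.internal", "target_port": "443"},
    "staging": {"target_host": "staging.example.internal", "target_port": "443"},
}


def jmeter_property_args(env_name, distributed=True):
    """Turn an environment's settings into JMeter command-line properties.

    In distributed mode, -G defines a global property that JMeter sends to
    every remote (slave) server; -J only sets it on the local machine.
    """
    flag = "-G" if distributed else "-J"
    props = ENVIRONMENTS[env_name]
    return [f"{flag}{key}={value}" for key, value in sorted(props.items())]


print(jmeter_property_args("staging"))
# ['-Gtarget_host=staging.example.internal', '-Gtarget_port=443']
```

Adding a new environment then means adding one dictionary entry (or properties file) to version control, not editing the test plan.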

This article highlights Innominds' recent experience implementing a performance testing solution for a prominent Retail organization operating in the Supply Chain domain. The organization was undertaking a significant transformation of its core platform, migrating to a modern Cloud-based solution.

In implementing the performance testing solution, we prioritized the following additional key themes:

Leveraging modern DevOps tools: To streamline and optimize the performance testing processes, we utilized various modern DevOps tools. Tools such as GitLab facilitated version control and collaboration, Docker and Docker Compose enabled efficient containerization of the testing environment, and JMeter provided a robust testing framework. By leveraging these tools, we enhanced efficiency, scalability, and collaboration within the performance testing activities.

Automation First, Automation Everywhere: Automation was pivotal throughout the performance testing solution. We adopted an "automation first, automation everywhere" approach, automating as many aspects of the performance testing process as possible. From test script creation to test data generation, test execution, and result analysis, automation was integrated into every step. This approach reduced manual effort, minimized human error, and allowed for repeatability and consistency in performance testing activities.
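Test data generation is one of the easier steps to automate. The sketch below assumes the data feeds a JMeter CSV Data Set Config; the column names (`sku`, `store_id`, `quantity`) and value ranges are hypothetical stand-ins for whatever a real supply-chain workflow needs. A fixed random seed keeps the generated file identical across runs, which supports the repeatability goal above.

```python
import csv
import io
import random


def generate_orders_csv(rows, seed=42):
    """Return CSV text with a header row and `rows` synthetic order lines.

    Column names are hypothetical placeholders. Using a seeded generator
    means every pipeline run feeds JMeter exactly the same data.
    """
    rng = random.Random(seed)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["sku", "store_id", "quantity"])  # header row
    for _ in range(rows):
        writer.writerow([
            f"SKU-{rng.randint(1, 5000):05d}",
            rng.choice(["S001", "S002", "S003"]),
            rng.randint(1, 20),
        ])
    return buffer.getvalue()
```

The pipeline could write the result to a file such as `data/orders.csv` (a hypothetical path) before the test starts. If the CSV Data Set Config's Variable Names field is left empty, JMeter reads the column names from the file's first line.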

Maintain as Code: We maintained the entire performance testing solution as code, including scripts, test data, tools, design, and configuration. The benefits are clear: treating these elements as code ensured version control, traceability, and easier collaboration, and it made the solution scalable and portable across different environments.

Optimize Cost: Cost optimization was crucial in our performance testing solution. To minimize costs, we implemented dynamic provisioning of the test tool infrastructure, which remained active only during actual test runs. This approach prevented unnecessary resource allocation and reduced operational expenses without compromising the effectiveness of the performance testing activities. Overall, it reduced load generation infrastructure costs by 90%.
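The arithmetic behind on-demand provisioning is simple: compute cost scales with billed hours, so the saving depends on the fraction of the month the load generators actually run. The sketch below uses entirely hypothetical inputs (10 instances, $0.40 per hour, 22 four-hour runs per month) to illustrate the calculation; it does not reproduce the figures from this engagement and ignores storage, networking, and image-pull costs.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def monthly_cost(instances, hourly_rate, billed_hours):
    """Compute-only cost; ignores storage, network and image-pull time."""
    return instances * hourly_rate * billed_hours


def on_demand_saving(active_hours, hours_in_month=HOURS_PER_MONTH):
    """Fraction of compute cost avoided versus an always-on fleet.

    Instance count and hourly rate cancel out, so the saving depends only
    on how many hours per month the load generators actually run.
    """
    if not 0 <= active_hours <= hours_in_month:
        raise ValueError("active_hours must be between 0 and hours_in_month")
    return 1 - active_hours / hours_in_month


# Hypothetical schedule: 22 runs a month, 4 hours each (including spin-up).
active = 22 * 4
always_on = monthly_cost(10, 0.40, HOURS_PER_MONTH)
on_demand = monthly_cost(10, 0.40, active)
print(f"always-on {always_on:.2f}, on-demand {on_demand:.2f}, "
      f"saving {on_demand_saving(active):.1%}")
```

Counting spin-up and teardown time in `active_hours` matters: containers are billed while they start, not only while they generate load.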

By embracing these key themes (leveraging modern DevOps tools, automating throughout, maintaining everything as code, and optimizing costs), we delivered a robust and efficient performance testing solution for the Retail organization's Supply Chain transformation project.

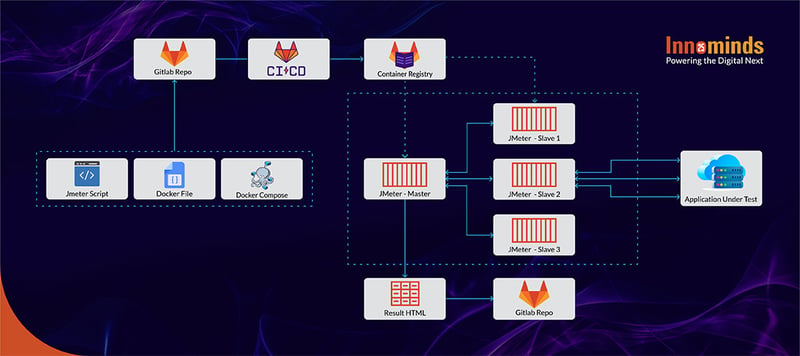

The solution implemented for performance testing in the Retail organization's Supply Chain transformation project involved the following steps:

Create JMeter Scripts: Using JMeter, an open-source load testing tool, we created test scripts that replicated real-world user actions. These scripts captured critical and frequently used workflows and were enhanced to simulate varying workloads and configurations.
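When a script must simulate a given workload, one common way to size the thread count (not necessarily the method used here) is Little's Law: concurrent users N = throughput X × (response time R + think time Z). The sketch below applies it and then splits the threads across load generators, because in JMeter's distributed mode every remote server runs the full test plan. The target numbers are hypothetical; the plan would read the per-server value with something like `${__P(threads,1)}` and receive it via `-Gthreads=13`.

```python
import math


def threads_for_target(throughput_per_sec, response_time_sec, think_time_sec):
    """Little's Law: concurrent users N = X * (R + Z), rounded up."""
    return math.ceil(throughput_per_sec * (response_time_sec + think_time_sec))


def threads_per_slave(total_threads, slaves):
    """Each JMeter remote server runs the whole plan, so split the threads."""
    return math.ceil(total_threads / slaves)


# Hypothetical target: 20 transactions/s, 0.5 s response time, 2 s think time.
total = threads_for_target(20, 0.5, 2.0)  # 20 * 2.5 = 50 threads
per_slave = threads_per_slave(total, 4)   # 13 threads on each of 4 slaves
```

Rounding up per server slightly overshoots the target (4 × 13 = 52 threads), which is usually preferable to falling short of the intended load.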

Maintain Scripts & Tooling in GitLab Repository: We set up a dedicated GitLab repository to facilitate collaboration and version control. It served as the central location for storing and managing the JMeter scripts and their dependencies, such as test data files. The repository also held a Docker Compose file that defined and managed the desired number of JMeter slave (secondary) instances, keeping the testing infrastructure scalable.

Add a Build Step in GitLab CI: We automated test execution within the DevOps pipeline by configuring GitLab CI/CD. GitLab Runners executed the JMeter scripts automatically on code commits or other trigger events. The test execution instructions were embedded in the pipeline definition, and the Docker images for the test tooling were maintained alongside it.
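The build step has to do three things in order: provision the load generators, run the test, and tear everything down, even when the test or provisioning fails, so no infrastructure outlives the job. A minimal sketch of a script the CI job could call is shown below; it assumes a runner that can reach a Docker daemon, and the function name and the idea of passing the commands in are hypothetical. The `runner` parameter defaults to `subprocess.run` but can be replaced with a fake so the sequence can be checked without Docker.

```python
import subprocess


def run_stage(up_cmd, test_cmd, down_cmd, runner=subprocess.run):
    """Provision, test, and always tear down; return the test's exit code.

    Each *_cmd is an argument list such as ["docker", "compose", "down"].
    """
    try:
        runner(up_cmd, check=True)  # raises if provisioning fails
        return runner(test_cmd, check=False).returncode
    finally:
        # Runs after success, test failure, or a provisioning error, so the
        # load generators never keep running once the job ends.
        runner(down_cmd, check=True)
```

The job's script would end with `sys.exit(run_stage(up, test, down))`, so a failing JMeter run fails the pipeline while teardown still happens.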

Create Docker Images: The entire tool setup and provisioning were maintained as Docker images in the GitLab repository. These images contained the configurations for the JMeter master (driver) and slave (load generator) machines, bundled with the plugins needed for distributed load testing with JMeter. Keeping the images in the repository ensured version control and easy access for the team.

Configure Docker Compose: Docker Compose defined the configuration required to dynamically create the load generation environment during the test run. It included specifications for networking, storage, and other requirements to provide the desired number of slave containers accurately.

Spin-Up Containers: Docker CLI and Docker Compose facilitated the dynamic spin-up of JMeter master and slave containers as part of the build step. The Docker Compose file, with the specified number of slave containers, ensured the proper provisioning of the load testing environment. This dynamic approach optimized infrastructure usage and resulted in cost savings by only utilizing resources when needed.
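Scaling the load generators and telling the master where they are can both be derived from a single number. The sketch below assumes a Compose project named `perf` and a service named `jmeter-slave` (both hypothetical), and relies on Docker Compose v2 naming replicas `<project>-<service>-<index>`; those container names resolve through Docker's DNS on the Compose network. The scaled service must not set a fixed `container_name`, since every replica needs a unique name.

```python
def compose_up_command(slaves, project="perf", service="jmeter-slave",
                       compose_file="docker-compose.yml"):
    """Build the `docker compose up` call that starts `slaves` replicas."""
    if slaves < 1:
        raise ValueError("need at least one load generator")
    return ["docker", "compose", "-f", compose_file, "-p", project,
            "up", "-d", "--scale", f"{service}={slaves}"]


def slave_hosts(slaves, project="perf", service="jmeter-slave"):
    """Comma-separated host list for JMeter's -R option (Compose v2 names)."""
    return ",".join(f"{project}-{service}-{i}" for i in range(1, slaves + 1))
```

Because both values come from the same `slaves` argument, changing the load profile is a one-parameter change in the pipeline rather than an edit to the Compose file.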

Executing Load Tests and Saving Results: Once the containers were up and running, the test run was triggered. The JMeter master container coordinated the test execution across the slave containers, simulating the desired load profiles based on the defined number of slave containers. The master container automatically captured and collated results, including relevant metrics and data. The results were then stored in the centralized GitLab repository for analysis and continuous improvement.
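The master's run and the collation of results can be sketched as follows. The JMeter options used are standard CLI flags (`-n` non-GUI, `-t` plan, `-R` remote hosts, `-l` results file, `-e`/`-o` HTML dashboard into an empty or new folder); the paths are hypothetical. The summary function assumes the results file is CSV with JMeter's default header, which includes the `label`, `elapsed` (ms), and `success` columns, and uses a nearest-rank p95 as one simple choice of percentile.

```python
import csv
import io
import math
from collections import defaultdict


def jmeter_master_command(plan, hosts, results_dir="results"):
    """Non-GUI distributed run; results and dashboard land in results_dir."""
    return ["jmeter", "-n", "-t", plan, "-R", hosts,
            "-l", f"{results_dir}/results.jtl",
            "-e", "-o", f"{results_dir}/dashboard"]


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize_jtl(jtl_text):
    """Per-label sample count, p95 elapsed time (ms) and error rate."""
    elapsed = defaultdict(list)
    errors = defaultdict(int)
    for row in csv.DictReader(io.StringIO(jtl_text)):
        label = row["label"]
        elapsed[label].append(int(row["elapsed"]))
        if row["success"].lower() != "true":
            errors[label] += 1
    summary = {}
    for label, values in elapsed.items():
        values.sort()
        summary[label] = {
            "samples": len(values),
            "p95_ms": percentile(values, 95),
            "error_rate": errors[label] / len(values),
        }
    return summary
```

A per-label summary like this is compact enough to commit next to the scripts and compare across runs, while the full JTL and dashboard remain available for deeper analysis.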

As you can see, a well-thought-out approach and model can successfully integrate performance testing into the DevOps pipeline. The result is cost savings and efficient management of the load testing infrastructure, which in turn delivers greater ROI and ensures greater speed and scale.