Deploying applications has always been a source of struggle for development teams. Add large volumes of data and machine learning to the mix, and you have full-blown chaos on your hands. There is no well-established infrastructure or methodology that simplifies the development and deployment of data-intensive applications. Given the importance of Machine Learning (ML) and Artificial Intelligence (AI) today, it is frustrating for organizations to adopt newer technologies without a proper methodology for deploying applications built with machine learning capabilities. This is a major hindrance to reaping the benefits of AI/ML technologies.

Given the potential benefits, it's no surprise that organizations that have invested in cloud-based machine learning are looking at MLOps to enable, monitor, and improve their models. Although it is still a nascent area, the global MLOps industry is predicted to grow to about $4 billion by 2025, from $350 million in 2019.

There have been many conversations around building tools for machine learning applications, and from them a new category has emerged: MLOps. The term has no single agreed definition, but it echoes the ideas of DevOps. In a way, MLOps can be considered DevOps for data-intensive applications: a methodology for streamlining the move of data-heavy applications from development to robust production deployments. Streamlining deployments through well-defined modern processes, automated workflows, and tools has worked wonders for other kinds of applications, so it is reasonable to assume that it will work for AI/ML applications too.

Let’s consider the question: why do AI/ML applications require special treatment? Why can’t they be deployed with the ever-efficient DevOps methodology?

Why does data make deployment a challenge?

In terms of infrastructure, ML applications are much like any other application. Ask an engineer how an ML-powered application is operated in production, and they will show you containers, cloud infrastructure, and operational dashboards. Yet even though these applications run on the same dependencies and infrastructure, software engineers face a lot of pain when working with AI/ML-powered applications.

The reason is the size and complexity of real-world data repositories in an AI/ML-powered application. Data repositories are too complex and messy to be modeled and deployed manually.

These characteristics of an ML application change the fundamentals of handling the application:

- AI/ML applications are directly exposed, in real time, to an ever-changing world. Their data inputs keep varying and updating, so the behavior of the application keeps changing too. A traditional application, by contrast, operates in a static, abstract world created and controlled by the developer.

- AI/ML applications involve a great deal of experimentation. Their behavior is determined through multiple experimentation cycles with constant exposure to data, whereas the behavior of a traditional application is well-defined through logical reasoning.

- The skill set, experience, and methodologies of the people who build AI/ML applications differ from those of a traditional software development team. AI/ML engineers combine empirical science with traditional software engineering skills. This renders established DevOps practices ineffective.
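To make the first point concrete, here is a minimal sketch of how a team might notice that live inputs have moved away from the data a model was trained on. It is only an illustration: the function names, the sample values, and the threshold of three standard deviations are assumptions, not part of any specific MLOps tool.

```python
from statistics import mean, stdev


def mean_shift(reference, live):
    """Return how many reference standard deviations the live mean has moved."""
    ref_sd = stdev(reference)
    if ref_sd == 0:
        raise ValueError("reference data has no spread to compare against")
    return abs(mean(live) - mean(reference)) / ref_sd


def has_drifted(reference, live, threshold=3.0):
    """Flag drift when the shift exceeds the (assumed) threshold."""
    return mean_shift(reference, live) > threshold


# Hypothetical feature values seen at training time and in production.
reference = [10.0, 11.0, 9.0, 10.5, 9.5]
stable = [10.2, 9.8, 10.1, 9.9]
shifted = [20.0, 21.0, 19.5, 20.5]

print(has_drifted(reference, stable))   # False
print(has_drifted(reference, shifted))  # True
```

A real deployment would likely compare full distributions rather than means, but even this simple check shows why an ML application needs monitoring that a traditional application does not.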

The situation is new for everyone. Decade-old practices that proved efficient across software development lead to clutter and chaos in AI/ML initiatives. To make AI/ML applications production-ready from the start, developers need a new set of standards to adhere to. That is where MLOps steps in.

The MLOps Infrastructure

Any AI/ML application has four specific requirements that need to be considered when designing an MLOps infrastructure:

- The scale of the application: AI/ML applications are data-centric, and the magnitude of their data is far larger than that of older data-centric applications. In addition, deep learning models require a lot of code and intricate processing of all that data. Both the codebase and the processing needs of these applications are therefore larger.

- Deep integrations with surrounding business systems: Because the application’s behavior is determined by data, it needs to be integrated with all the supporting business applications to collect accurate, real-time data. This is the only way an AI/ML application can be tested and validated.

- Robust versioning of data, models, and code: AI/ML applications are extremely complicated because real-time data is deeply entwined with the code and the data models. Without a proper versioning system, there is no way to figure out why a part of the application is malfunctioning or where a deployment went wrong. You will need a solution similar to Git, but on steroids, to handle this.

- Careful and specific orchestration requirements: The orchestration of a machine learning-powered application is the most complex of all. The team needs to plan and execute multiple interconnected steps diligently, leaving no room for human error.
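As a rough illustration of the versioning requirement, the sketch below records a fingerprint for code, data, and model together, so that a change in any one of them can be traced. The function names and sample inputs are hypothetical, and a real system would hash files or datasets rather than short byte strings.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """Short content hash: identical bytes always yield the same fingerprint."""
    return hashlib.sha256(payload).hexdigest()[:12]


def release_record(code: bytes, data: bytes, model: bytes) -> dict:
    """Tie code, data, and model versions together under one release id."""
    parts = {
        "code": fingerprint(code),
        "data": fingerprint(data),
        "model": fingerprint(model),
    }
    combined = json.dumps(parts, sort_keys=True).encode("utf-8")
    return {**parts, "release": fingerprint(combined)}


# Two hypothetical releases: only the training data differs.
v1 = release_record(b"def predict(x): ...", b"age,income\n34,52000\n", b"weights-v1")
v2 = release_record(b"def predict(x): ...", b"age,income\n34,52000\n35,61000\n", b"weights-v1")

changed = [key for key in ("code", "data", "model") if v1[key] != v2[key]]
print(changed)  # ['data']
```

Comparing two records shows which of the three ingredients changed between deployments, which is the kind of question plain code versioning cannot answer on its own.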

Keeping all these requirements of AI/ML applications in mind, consider what an MLOps infrastructure must consist of. Put simply, we need an infrastructure that allows the results of data-centric programming and data models to be deployed to a modern production infrastructure that can sustain an application of that scale. Just as DevOps practices enable reliable, continuous deployment of traditional software artifacts, MLOps is the way out of the clutter for AI/ML-powered applications.
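The interconnected steps named in the orchestration requirement can be pictured as a dependency graph that a tool, rather than a person, puts in order. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the step names and the placeholder actions are assumptions made for illustration, not a description of any particular orchestrator.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
pipeline = {
    "ingest": set(),
    "validate": {"ingest"},
    "train": {"validate"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def run_pipeline(steps, actions):
    """Run every step only after all of its dependencies have run."""
    # static_order() raises graphlib.CycleError if the steps depend on each other in a loop.
    order = list(TopologicalSorter(steps).static_order())
    executed = []
    for name in order:
        actions[name]()
        executed.append(name)
    return executed


# Placeholder actions; a real pipeline would call data and training jobs here.
actions = {name: (lambda n=name: print(f"running {n}")) for name in pipeline}
executed = run_pipeline(pipeline, actions)
```

Declaring dependencies and letting the sorter derive the execution order removes one common source of human error: running a step before its inputs are ready.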

Since the evergreen DevOps methodology falls short here, MLOps requires a different foundation. Every AI/ML application differs vastly from the next, so the implementation of an MLOps infrastructure varies, but the infrastructural layers remain the same.

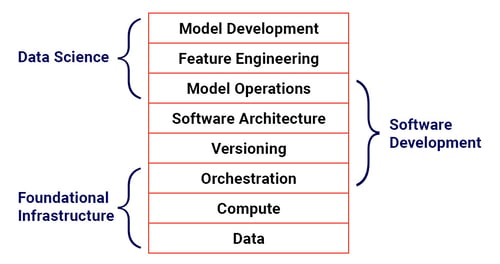

The MLOps infrastructure is divided into three categories: the Data Science layer, the Software Development layer, and the Foundational Infrastructure layer.

Source: the book “Effective Data Science Infrastructure”

The implementation of the Foundational Infrastructure layers plays a significant role in the success of the application. Also, each category has layers overlapping with the next category in the MLOps infrastructure. This echoes the fundamentals of the DevOps methodology with a different approach.

MLOps programs today, like successful DevOps initiatives before them, help businesses bring different teams working on hard, innovative projects together. In the long term, organizations of all kinds are discovering that the control given by an effective MLOps approach may lead to more efficient, productive, accurate, and trusted models. It all comes down to completing some housework before diving into your coursework. Even if you're training a machine to do it, you can't get things done in a siloed mess. It's past time to recognize the value of effective machine learning.

Many organizations are already utilizing the capabilities of MLOps to improve the performance and longevity of their models. Furthermore, the organizations can share their knowledge and results in an open-source environment, allowing for faster dissemination and adoption of broader information about machine learning operations.

MLOps has helped organizations in sectors such as public transportation, healthcare, engineering, safety, and manufacturing improve their machine learning development procedures.

To understand the world of MLOps with detailed use cases and experience stories from industry leaders and learn how to build and implement your MLOps infrastructure the right way, join our Virtual Fireside Chat – “Achieve Autonomous AI for the Enterprise”, on 18th November 2021.

Panelists include Nick Patience (Founder & Research VP, 451 Research) and Ravi Meduri (EVP, Innominds). The virtual session is moderated by Sairam Vedam (CMO, Innominds).
